AI: Spring Awakenings

Issue 150, March 7, 2024

We think the onset of Spring is a good time for house cleaning and for pausing to reassess AI. It has captured both the imagination and the anxiety of almost anyone who has been paying attention. Take a straw poll and you will likely get two opposing answers. Either AI is a tool that will help human beings achieve greater productivity and profitability, or AI is a threat to national security and to humanity, a pathway to transcending human cognitive abilities that will supersede the human mind and ultimately take over humanity.

We would argue that most people who talk about AI do not have a firm grasp of what it is in all its variations, so the public AI narrative is more fiction than truth.

AI and Its Many Flavors

AI comes in many flavors, including machine learning (ML), which analyzes large sets of data to detect patterns and learn from them. Then there are the chatbots and generative tools: OpenAI’s ChatGPT, Microsoft Copilot, Google’s Gemini, Adobe’s Firefly and many others that have been introduced to the masses in nearly every application and website. The bots aren’t the whole picture, but they represent the AI the public most often experiences.

Two Consumer Reports surveys (August 2023 and December 2023) found that 33% of Americans had used an AI chatbot in the previous three months. ChatGPT was far and away the most common, used by 19% of Americans. Six percent used Bing AI and 4% used Google’s Bard (now named Gemini). That leaves 67% of the public who have not yet personally explored AI for their own purposes. Those who have used a chatbot have quickly found that their interactions, although somewhat entertaining, have done little to help them at work or in their personal lives. Over the past week, The Washington Post, The New York Times and The Wall Street Journal have each published articles helping readers understand AI, explaining where chatbots work (to a limited degree) and where they simply fail.

Judging by the hype across society and the marketplace, even the stock market, AI appears to be a second coming that will solve all our woes and meet all our needs. That hype persists despite the failures, errors, hallucinations and outright WTF moments resulting from its use.

Imagining the Future

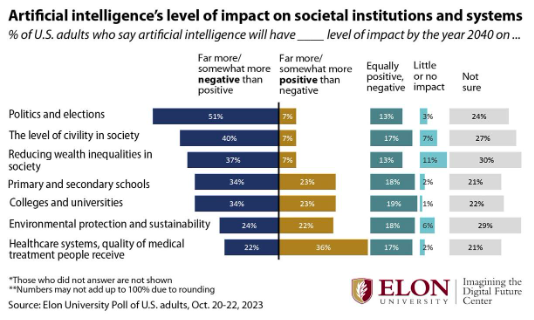

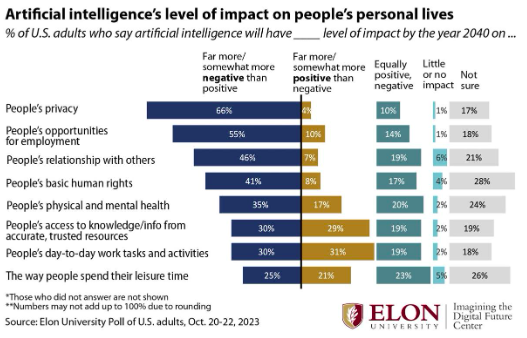

A research study recently published by Elon University, “Imagining the Digital Future,” reports “Americans are more likely than not to hope AI will improve the nation’s healthcare systems and the quality of medical treatment by 2040. Still, they have deep concerns that AI will negatively impact politics and elections and further erode the level of civility in society. They also are concerned that the level of wealth inequality will continue to grow.”

We are including two charts from Elon University’s study to show the growing negative influence respondents expect AI to have on society. Change, particularly when triggered by something poorly understood, often causes more fear and apprehension than optimism. The results are revealing: respondents already see AI, algorithms and technology as directly responsible for, or a catalyst of, the issues facing a polarized society. Will AI further erode our societal structure? Or will it indeed meet the promise many hoped for?

AI in the Last Five Months

GenAI is the form of AI many of us experience directly, and it has become the marketplace’s latest darling and villain. Although AI research dates back to the 1950s and AI/ML have long operated in our industries, OpenAI’s introduction of large generative language models changed the conversation almost overnight. The New York Times reports, “Silicon Valley insiders believe that generative AI, the technology behind ChatGPT, is a once-in-a-generation technology that could transform the tech industry as thoroughly as web browsers did more than 30 years ago.”

In fewer than five months, Nvidia’s market cap has grown to $2.13 trillion. GenAI has spawned a new language-model industry, with companies competing to bring better solutions to organizations more quickly and effectively. Then OpenAI introduced Sora in February, raising the stakes with AI-generated video. It got the attention of Tyler Perry, who halted the expansion of his studios in Georgia.

And suddenly, it seemed, the ethicists and moralists woke up, treating the arrival of GenAI as a tipping point for social media. Big tech was taken to task in a Senate hearing, and Elon Musk (love him or hate him) is suing OpenAI, which he helped found, for betraying its founding mission. Yet, in typical Musk fashion, he also started a new company focused entirely on AI. Talk about a contradiction … or is it another bid for attention, feeding the media’s fascination with everything Musk?

So, for the record, let’s review recent developments.

Clarity

Any conversation about AI has to start with clarity about what AI is and is not. As we have written before, GenAI is not a thing. It is not an entity. It is a tool programmed by humans and triggered by human prompts to churn out information distilled from a body of information originally created by humans. That information comes from training data sets that include the archives of The New York Times and many other publishers across the globe. The pattern is clear: the information was written by humans, all of whom have known and unknown biases. When GenAI coughs out garbage or seems to hallucinate, we must consider the sources that fed it in the first place. AI has no viewpoint of its own and is incapable of independent reasoning, so it must distill masses of information to find a logical answer against a diverse backdrop of biases. If you think about it, AI struggles to determine fact and truth from often unreliable or unstable input and biased prompts. And all of that input, and every prompt, is created by human beings.

Using Our Minds

Yes, but. AI has an uncanny ability to appear as though it has autonomy and agency when it hallucinates and mangles facts into misinformation. We have written many times about the importance of using our minds. AI deepens that necessity, because using it requires vigilance and critical thinking to discern truth from fiction. Yet our schools do not commonly teach children how to think analytically. That is a subject for another day, but if we educate the next generation without teaching them how to tell the difference, GenAI may indeed end up operating at a higher level of cognition than our kids.

AI Successes

AI/ML have been the backbone of many of our industries for decades. AI helped enable the mRNA Covid vaccine breakthrough. It has unlocked tech solutions for supply chain management. It has made marketing more efficient at matching products with customers. It reads X-rays, in some cases better than technicians. It has sped up the transportation industry. It runs fast food systems, helps fertilize crops in our farm fields and creates sizing and fit solutions for the fashion industry. There’s a good chance that much of what you use every day has been touched by AI/ML. Just ask Siri or Alexa, your personal AI assistants, powered by AI and filled with information about AI.

Calculated Risk

When speculating about the potential of AI, it’s useful to consult an expert. As David Marchese reported in The New York Times, “45-year-old computer scientist Yejin Choi, a 2022 recipient of the prestigious MacArthur genius grant, has been doing groundbreaking research on developing common sense and ethical reasoning in AI.” Asked whether humans will ever create sentient intelligence, she said, “There is a bit of hype around AI potential, as well as AI fear. It has the feeling of adventure.” She added, “Currently I am skeptical. I can see that some people might have that impression, but when you work so close to AI, you see a lot of limitations. Whenever there are a lot of patterns and a lot of data, AI is very good at processing that — certain things like the game of Go or chess. But humans have this tendency to believe that if AI can do something smart like translation or chess, then it must be really good at all the easy stuff too. The truth is, what’s easy for machines can be hard for humans and vice versa. You’d be surprised how AI struggles with basic common sense.”

Quartz adds, “Unlike humans, who have both conscious and subconscious thought processes, AI mostly uses statistical and symbolic reasoning. So, it struggles with tasks that require intuition, gut feeling, and implicit knowledge, which often inform human critical thinking and emotional intelligence. Those traits will continue to be irreplaceable in the evolving job market.”

Blunders

As CNBC recently reported, “Google CEO Sundar Pichai said that the company is working around the clock on a fix for its artificial intelligence image generator tool.” He said the company is creating new processes for AI product launches, acknowledging that the tool had “offended our users and shown bias.”

The report adds, “The image generator tool allows users to enter prompts to create an image. Over the past week, users discovered historical inaccuracies that went viral online, and the company pulled the feature last week, saying it would relaunch it in the coming weeks.” Pichai said, “Our mission to organize the world’s information and make it universally accessible and useful is sacrosanct. We’ve always sought to give users helpful, accurate, and unbiased information about our products. We’ll be driving a clear set of actions, including structural changes, updated product guidelines, improved launch processes, robust evals and red-teaming, and technical recommendations. We are looking across all of this and will make the necessary changes.”

In older news, the CEOs of Meta, TikTok, X, Snap and Discord testified before the Senate Judiciary Committee in January about safety risks to children on their platforms. After hours of testimony, facing parents whose children had died or been exploited through social media, Mark Zuckerberg caved and turned to address them, saying, “I’m sorry for everything you have all been through. No one should go through the things that your families have suffered.” Apologies are kind, but corporate policies and guardrails (or the lack thereof) are what will change the dynamic of social media and protect users.

And most recently, Elon Musk is suing OpenAI, valued at $80 billion, and its co-founder Sam Altman, “alleging they broke the artificial-intelligence company’s founding agreement by giving priority to profit over the benefits to humanity.” The New York Times reports that “the 35-page lawsuit is the latest chapter in a fight between the former business partners that has been simmering for years, and it homes in on unresolved questions in the AI community: Will artificial intelligence improve the world or destroy it, and should it be tightly controlled or set free?”

Musk has a history of clashing with big tech (even though he is part of it), warning that its technologies could have catastrophic consequences for humanity. As reported by The Wall Street Journal, Musk’s complaint is that his fellow co-founders, Altman and Greg Brockman, originally agreed to pursue a nonprofit approach “for the benefit of humanity, and not any single company.”

In one of the most absurd examples of the dangers of GenAI, The Washington Post reports that “dignifAI refers to the use of artificial intelligence to class up a person’s image via removing piercings, raising necklines, lowering hems, etc. Wardrobe transformations are, it turns out, the least of dignifAI’s concerns. The participants are actually interested in lifestyle transplants. Women who were once necklace-free are suddenly wearing crosses. A box of cigarettes is transformed into a homemade quiche.” Honestly, what does this obsessive behavior really say about the human condition?

To the Future and Beyond

With the introduction of Sora, OpenAI’s generative video tool, we enter another “Alice in Wonderland” AI world. In OpenAI’s words, “We’re teaching AI to understand and simulate the physical world in motion with the goal of training models that help people solve problems that require real-world interaction. Sora can generate videos up to a minute long while maintaining quality and adherence to the user’s prompt.” OpenAI is being proactive, stating, “We’re sharing our research progress early to start working with and getting feedback from people outside of OpenAI.”

This is either the most amazing news of 2024 or just another game-changing development in AI. But imagine the consequences of human-prompted videos of completely falsified events that could be mistaken for the real thing on digital feeds. As OpenAI says, “Despite extensive research and testing, we cannot predict all of the beneficial ways people will use our technology, nor all the ways people will abuse it.”

Last Words

At the core of any AI discussion is the need for guardrails to keep AI’s harmful results in check. If only we were a species whose default was noble, ethical decision-making dedicated to the common good rather than national or personal gain. We are at another of those hinge points in history when the decisions we make now will shape our future forever. Axios reports that, according to a recent Edelman survey, global trust in AI companies has dropped to 53%, down from 61% five years ago. In the U.S., trust has fallen 15 points, from 50% to 35%.

In the short term, the proliferation of these technologies has created demand for new expertise in discerning the real from the false, in both language and images. Think: Chief AI Officer, AI project teams, AI ethics committees, Chair of AI and Digital Transformation. It’s manifest destiny for AI. It’s our job to understand its capabilities and develop the skills and talent to manage it.

Get “The Truth about Transformation”

The 2040 construct for change and transformation. What’s the biggest reason organizations fail? They don’t honor, respect, and acknowledge the human factor. We have compiled a playbook for organizations of all sizes to consider all the elements that comprise change, and we have included some provocative case studies that illustrate how quickly transformation can derail.