The Future of Personal Agency

Issue 102, April 6, 2023

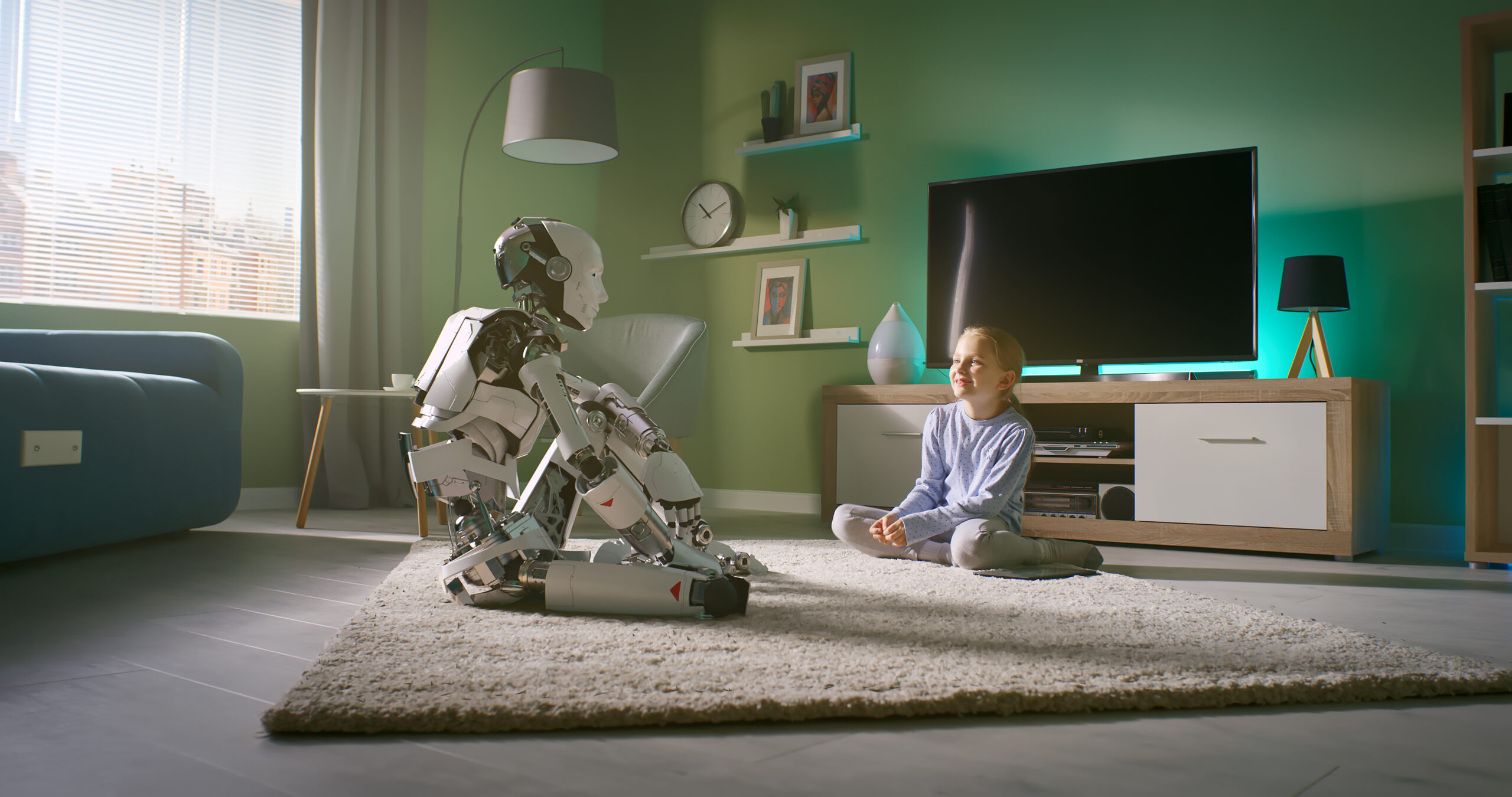

It can’t come as a surprise that many people feel they are losing their sense of personal agency in the face of such rapid technological change, global disruptions, and polarized social and political factions. What is personal agency? It is the “sense that I am the one who is causing or generating an action. A person with a sense of personal agency perceives himself/herself as the subject influencing his/her own actions and life circumstances,” according to Springer. Wikipedia defines self-agency, also known as “the phenomenal will,” as the sense that one’s actions are self-generated. Scientist Benjamin Libet was a pioneer in studying it, concluding that “brain activity predicts the action before one even has conscious awareness of his or her intention to act upon that action.” And that ability to predict is precisely what we have built into AI in an attempt to mimic how our brains function.

How does this compromised feeling of personal agency show up in life? Consider some of these (mostly) dismal scenarios based on recent events:

- Self-worth: Teens are bullied on social networks, in some cases leading to self-harm and suicide.

- Shame: Young people with disabilities are made fun of on social networks with devastating emotional and psychological results.

- Disintermediation: Professional content writers (advertising, marketing, journalism) are facing irrelevance as advanced AI language programs take on their work.

- Out of Service: Customer service agents are being replaced by AI chatbots.

- Legal Loss: The work of paralegals and legal assistants is likely to be automated.

- Trust: The news and information we rely on to make decisions are increasingly generated by AI, often with minimal vetting.

- MedTech: Radiologists will be replaced by AI for medical image interpretation.

- Accounting: CPAs and other accounting professionals are predicted to become irrelevant as AI can do the “work” they once did.

- Analysts: Market researchers are looking to AI to collect data, identify trends, and then use those findings to design marketing campaigns or plan advertising placements.

- Knowledge: Students are already finding ways to have AI do their homework and write their papers, leaving them with a deficit of knowledge and skills.

- Automated Design: The DALL-E tool is disrupting the graphic design field by generating acceptable images within seconds.

Agency at Risk

Lee Rainie and Janna Anderson of the Pew Research Center recently conducted a major study of personal agency, exploring how much control people will retain over essential decision-making as digital systems and AI advance. The study reports that powerful corporate and government authorities will expand the role of AI in people’s daily lives in useful ways, but many worry that these systems will diminish individuals’ ability to control their choices. Some of the analysts canvassed are concerned that as business, government, and social systems become more automated, humans are losing the ability to exercise judgment and make decisions independent of these systems.

Over the past several weeks, many individuals and groups have issued petitions and open letters to companies and the government calling for a pause in AI development of at least six months, to allow time for reflection and closer consideration of the consequences AI may bring to society as a whole.

Elon Musk, known as a trailblazer and a risk taker, was one of the original founders of OpenAI. He resigned from its board when he felt the organization was taking a path he didn’t agree with. He has been telling anyone who will listen that a societal AI pause is needed. Even Musk sees a potential threat to human agency.

Sam Altman, a co-founder and the CEO of OpenAI, the company behind ChatGPT, offered a stark contrast in a recent wide-ranging interview with the Wall Street Journal: “If you’re making nuclear fusion, it’s all upside. It’s just good,” he said. “If you’re making AI, it is potentially very good, potentially very terrible.”

According to the WSJ, Altman’s original goal was to forge a new world order in which machines free people to pursue more creative work. In his vision, universal basic income (the concept of a cash stipend for everyone, no strings attached) helps compensate for jobs replaced by AI. The Journal reported that Altman even thinks humanity will love AI so much that an advanced chatbot could represent “an extension of your will.” Yet he seems to be reconsidering that vision, given the runaway train that iterative AI development has become.

Now consider that Microsoft has announced it will embed OpenAI’s GPT-4, the technology behind ChatGPT, in its Microsoft 365 (Office) apps through Copilot. Canva has built OpenAI’s technology into its design platform, Adobe is adding generative AI to its own tools, and last week OpenAI introduced “plug-ins” that connect ChatGPT to services from major companies, many of them tools and platforms you may use professionally and personally on a routine basis. Almost overnight, whether society wanted it or not, AI has moved front and center, touching many aspects of our lives.

The Pew study describes our current moment as a turning point that “will determine a great deal about the authority, autonomy and agency of humans as the use of digital technology spreads into more aspects of daily life.” Digging deeper, this raises the existential question, “What is it to be human in a digital age?” That question is quickly followed by another: what do humans really want agency over? With AI embedded in our daily lives, when will people feel comfortable turning to AI to help them make decisions? The study also asks, “Under what circumstances will humans be willing to outsource decisions altogether to digital systems?” Whether in reality or in perception, threats to who we are as humans and to what we believe we can control are permeating our society.

2035 on the Horizon

At the core of the study is the prediction that smart machines, bots and systems powered mostly by machine learning and artificial intelligence will quickly increase in speed and sophistication between now and 2035. According to the study, 56% of the experts canvassed agreed that by 2035 smart machines, bots and systems WILL NOT be designed to allow humans to easily be in control of most tech-aided decision-making relevant to their lives. Among the most common themes they expressed:

- Powerful interests have little incentive to honor human agency.

- Humans value convenience and will continue to allow black-box systems to make decisions for them.

- AI technology’s scope, complexity, cost, and rapid evolution are just too confusing and overwhelming to enable users to assert agency.

On the flip side, 44% of the experts canvassed agreed with the statement that smart machines, bots and systems WILL be designed to allow humans to easily be in control of most tech-aided decision-making relevant to their lives. Among the most common themes they expressed:

- Humans and tech always positively evolve.

- Businesses will protect human agency because the marketplace demands it.

- The future will feature both more and less human agency, and some advantages will be clear.

Synergy

To add to the dilemma of how AI and humans will co-exist (both positively and negatively), here is a thought-provoking development. People have a long history of training their replacements, but this is a dramatic twist. Axios recently reported that the artificial intelligence job market is so hot that employers are offering salaries of up to $335,000 a year to help sharpen the technology of the future. Anthropic, an AI company backed by Alphabet, is advertising for the role of prompt engineer with sky-high pay. But this position reaches beyond coding. According to Axios, prompt engineers are like “AI whisperers, and will come from a history, philosophy, or English language background, because it’s wordplay. The job is to try to distill the essence or meaning of something into a limited number of words.” These AI whisperers are tasked with writing prompts that coax AIs like ChatGPT into producing smarter results, and with training companies on how best to use AI. Finally, a role for humanities students in an AI-infused society!

Discussion Points

The Pew study reveals key issues that we recommend you use as discussion points with your workforce, both management and frontline. These perspectives will help prepare your organization for a future in which AI operates alongside humans, ultimately affecting all your stakeholders. The issues span both optimistic and less optimistic scenarios of AI’s power and influence; some are controversial, some are predictive, and most are provocative. The AI horse is out of the barn, so it is better to be prepared than blindsided. It seems clear that we are all going to go on this journey together and learn along the way, hopefully in good, consequential ways, as we critically assess what the future of AI and human beings may be.

- The success of AI systems will remain constrained due to their inherent complexity, security vulnerabilities, and the tension between targeted personalization and privacy.

- Society will no longer be human but instead socio-technical: Without technology there would be no “society” as we know it.

- People tend to be submissive to machines or any source of authority. Most people don’t like to think for themselves but rather like the illusion that they are thinking for themselves.

- Machines allow “guilt-free decision-making.”

- Machines that think could lead us to become humans who don’t think.

- The bubble of algorithmically protected comfort will force us to find new ways to look beyond ourselves and roll the dice of life.

- Digital tools to support decision-making are upgrades of old-fashioned bureaucracies; we turn over our agency to others to navigate our limitations.

- Humans will be augmented by autonomous systems that resolve complex problems and provide relevant data for informed decisions.

- Digital systems will let those willing to adopt them live a life of “luxury,” with the systems assuming subservient roles and freeing users of many tedious chores.

- In order for Big Tech to choose to design technologies that augment human control, the incentive structure would have to be changed from profit to mutual flourishing.

- The standardization of routine decisions as AI takes them over will make many of them more reliable, easier to justify, and more consistent across people.

- Industry needs open protocols that allow users to manage decisions and data and that provide transparent information, empowering users to know what the tech is doing.

- AI will be built into so many systems that it will be hard to draw a line between machine decisions and human decisions.

- Major tech-driven decisions affecting the rest of us are being made by smaller and smaller groups of humans.

- When choice is diminished, we impede our ability to adapt and progress.

- Whoever writes the code controls the decision-making and its effects on society.

Why Personal Agency Matters

At 2040, we work with clients to support and strengthen the practice of critical thinking throughout the organization. This is one of those crucible moments when critical thinking is just that, critical.

Thriving in an era of extreme digital and technological advances requires more agency, not less. We need the ability to “cut through all of what pulls at us, find emotional and physical balance, think more clearly, and advocate for ourselves so we can take a course of action that makes sense. With agency, we can feel more in command of our lives,” according to Mindful.

This is not psychobabble; personal agency furthers self-efficacy, self-awareness and self-esteem. Personal agency is supported by critical thinking, by setting goals, and by taking action to achieve them. People with high agency feel a sense of control over their lives, and they can make decisions about what they want or need and act to meet those needs. And that is a formula for a healthy organization with a shared purpose in its work to achieve success, both personal and professional.

Get “The Truth about Transformation”

The 2040 construct for change and transformation. What’s the biggest reason organizations fail? They don’t honor, respect, and acknowledge the human factor. We have compiled a playbook for organizations of all sizes to consider all the elements that comprise change, and we have included some provocative case studies that illustrate how transformation can quickly derail.